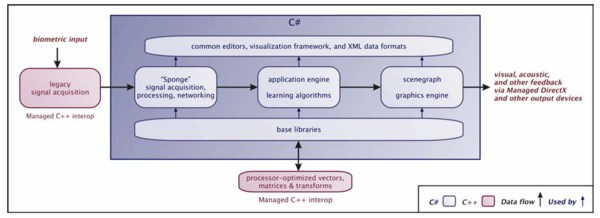

Symphony is a framework and suite of tools for designing, prototyping and implementing applications that interpret and visualize a variety of biological signals in real time. Symphony integrates a real-time signal processing framework, a 3D visualization package, and the foundation required to rapidly develop diverse applications.

The MindGames group at MIT MediaLabEurope used Symphony to build a number of applications, some of which went on to be used in clinical, rehabilitation and even performing arts settings. Several other research groups at the lab also used components of Symphony. One group shipped a Symphony-built project to NASA. In 2004, Symphony was selected as the focus of a Microsoft Global Case Study for its innovative end-to-end use of the .NET Framework. Components of Symphony have also been used by:

- The Affective Computing Group, MIT Media Lab

- The Palpable Machines Group, MIT MediaLabEurope

- The Anthropos Project, MIT MediaLabEurope

Sample Applications

Here are some examples of real-time applications that have been developed with Symphony. Other applications in development with Symphony when MediaLabEurope closed its doors included Peace Composed and Aura Lingua.

Mind Balance

Goal: Real-time non-invasive acquisition of electroencephalogram signals from the surface of the head to control a character

Challenges:

- Precise rendering required to produce signals that can be detected as brainwave activity

- Research and development of signal processing occurring in parallel with app development

Render speed required: 60 fps (minimum)

Render speed achieved: 100+ fps (on conventional hardware)

Additional information:

- Uses Sponge to separate signal processing and rendering tasks.

- Uses native code to interface with legacy signal acquisition software.

- Currently being extended by researchers with no prior C# experience.

Developed with:

- MindGames group and University College Dublin Elec. Eng. Dept.

For more information: Mind Balance page

Still Life

Goal: “Magic Mirror” application used in physiotherapy and live performance environments.

Challenges:

- Processor-intensive real-time video analysis

- Control system for use in performances

- Reliability and reporting in clinical environment

Render speed required: 20 fps (minimum)

Render speed achieved: 20-40 fps (depending on mode, using conventional hardware)

Additional information:

- Uses Sponge to record videos of performances

- Showcased in the Féileacán project at the closing ceremonies of AAATE2003, September 2003, Dublin.

- Provided the complete interactive technical component of the Anima Obscura performance at the United Nations World Summit on the Information Society, December 2003.

Developed with: MindGames group, Dublin’s Central Remedial Clinic, CounterBalance and SmartLab

For more information: Still Life page

Signal Processing

An important component of Symphony is a real-time signal processing framework that has been used in our applications to process everything from live video to brainwaves. The framework provides a visual interface that allows a designer to drag and drop atomic signal processors that perform operations ranging from wavelet transforms to image differencing. These can be assembled into real-time signal processing networks that are used by an application.

Relax to Win PocketPC running under MiniSymphony on an iPAQ 3870.
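The idea of assembling atomic processors into a network can be pictured with a small sketch. Symphony itself was a .NET framework with a visual editor, and its actual API is not shown on this page, so everything below is an assumption made for illustration: the class names (`Processor`, `FrameDifference`, `Mean`, `Threshold`, `Network`), the flat-list frame representation, and the restriction to a simple linear chain (a real network may well have been a graph). Python is used purely for brevity.

```python
from typing import Any, List, Optional


class Processor:
    """Hypothetical atomic processor: takes one sample, returns one result."""

    def process(self, sample: Any) -> Any:
        raise NotImplementedError


class FrameDifference(Processor):
    """Image differencing: absolute per-pixel change from the previous frame."""

    def __init__(self) -> None:
        self._previous: Optional[List[float]] = None

    def process(self, frame: List[float]) -> List[float]:
        if self._previous is None:
            # No earlier frame to compare against, so report no change.
            diff = [0.0] * len(frame)
        else:
            diff = [abs(a - b) for a, b in zip(frame, self._previous)]
        self._previous = list(frame)
        return diff


class Mean(Processor):
    """Reduces a list of values to its average (0.0 for an empty list)."""

    def process(self, values: List[float]) -> float:
        return sum(values) / len(values) if values else 0.0


class Threshold(Processor):
    """Reports whether a value exceeds a fixed level."""

    def __init__(self, level: float) -> None:
        self.level = level

    def process(self, value: float) -> bool:
        return value > self.level


class Network(Processor):
    """A linear chain of processors; each stage feeds the next."""

    def __init__(self, *stages: Processor) -> None:
        self.stages = stages

    def process(self, sample: Any) -> Any:
        for stage in self.stages:
            sample = stage.process(sample)
        return sample


# A toy "motion detector": difference frames, average the change, threshold it.
motion = Network(FrameDifference(), Mean(), Threshold(0.1))
frames = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
]
results = [motion.process(frame) for frame in frames]
```

Because a `Network` is itself a `Processor`, a chain built this way could be dropped into a larger chain as a single stage, which is one plausible reading of how small processors compose into bigger networks.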

MiniSymphony

MiniSymphony is a version of Symphony that runs on the .NET Compact Framework. It was created by removing Symphony’s dependency on a couple of native libraries and whittling away a bit at its Visualizers. Within a matter of days, the first version of Relax to Win PocketPC (without biometric input) was implemented using MiniSymphony. There were plans within the group to port Relax to Win’s biometric input into a new portable version of the game using MiniSymphony.

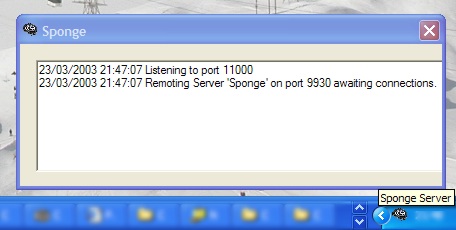

Sponge

One aspect of the signal processing framework, nicknamed Sponge, lets Symphony distribute signal processing across a local area network (LAN). Sponge uses Windows Management Instrumentation (WMI) to query other computers on the LAN that are running a sentinel program and to determine what processor and memory resources they have available. Then, using .NET Remoting, Sponge can connect to chosen machines and deploy signal processing tasks to them.

Sponge was originally intended to facilitate system designs in which signal acquisition and processing are performed on potentially unspecified computers in a dynamic LAN environment. It has allowed for this, and has also offered some tremendous fringe benefits. For example, Still Life can use Sponge to record live video of a performance by streaming screenshots to another computer over the LAN in real time. These can then be processed offline to turn them into a video file.
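The step in which Sponge picks machines based on their reported resources can be sketched as follows. This is only an illustration under stated assumptions: it does not use WMI or .NET Remoting, the report fields (`cpu_idle_percent`, `free_memory_mb`), the host names, and the selection policy (most idle CPU among machines with enough free memory) are all hypothetical, and the page does not say how Sponge actually ranked candidates.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class NodeReport:
    """Resources a sentinel program might report (hypothetical fields)."""

    host: str
    cpu_idle_percent: float
    free_memory_mb: int


def choose_node(
    reports: Iterable[NodeReport], required_memory_mb: int
) -> Optional[NodeReport]:
    """Pick the most idle machine that has enough free memory.

    Returns None when no machine meets the memory requirement.
    This policy is an assumption, not Sponge's documented behavior.
    """
    eligible = [r for r in reports if r.free_memory_mb >= required_memory_mb]
    return max(eligible, key=lambda r: r.cpu_idle_percent, default=None)


# Simulated answers from three machines running the sentinel.
reports = [
    NodeReport("lab-pc-1", cpu_idle_percent=35.0, free_memory_mb=512),
    NodeReport("lab-pc-2", cpu_idle_percent=80.0, free_memory_mb=128),
    NodeReport("lab-pc-3", cpu_idle_percent=60.0, free_memory_mb=256),
]

chosen = choose_node(reports, required_memory_mb=200)
too_demanding = choose_node(reports, required_memory_mb=1024)
```

Here `lab-pc-2` is the most idle machine but is excluded for lack of memory, so the task would go to `lab-pc-3`. A request that no machine can satisfy yields `None`, leaving the caller to fall back to local processing.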

CodeZone article

I recently wrote an article describing Symphony for Microsoft’s CodeZone Magazine (in the context of some of the group’s recent work: the Féileacán Project, Still Life, Mind Balance and the venerable Relax to Win). I have two PDF versions of the article online: a scanned copy of the article, and a printer-friendly version that lacks CodeZone’s superior formatting. (Note: the engine was named Symphony a matter of days after that article went to press.)

Current Status of Symphony

Symphony was developed between 2002 and 2004 at MIT MediaLabEurope, which has since closed its doors.

Since leaving the lab, I have independently developed a successor to Symphony. Work on that codebase was postponed before I joined Microsoft’s Developer and Platform Group. If you have any questions about Symphony, or are interested in using a visualization and signal processing engine, please don’t hesitate to contact me.