Imagine a world where your brainwaves offer you another degree of freedom in a control system — and think of how useful that freedom would be for someone who can’t use a conventional controller like a mouse.

We can’t lift starfighters with Jedi Mind Tricks just yet, but the MindGames group at MIT’s MediaLabEurope took strides in that direction through the non-invasive real-time analysis of human brainwaves.

Mind Balance was the first application developed by the MindGames group as part of an ambitious collaboration with researchers at University College Dublin to implement new brain-computer control interfaces.

Taking the Mawg for a Walk

In Mind Balance, a participant must assist a tightrope-walking (apparently Scottish) behemoth known as the Mawg, by helping him keep his balance as he totters across a cosmic tightrope. All in a day’s work for a typical computer gamer – but a participant at the helm of Mind Balance has no joystick, no mouse, and not even a camera – only a brain cap that non-invasively measures signals from the back of the head.

Specifically, the cap monitors electrical signals from the surface of the scalp over the occipital lobes (just above the neck). The occipital lobes are the home of the brain’s visual processing, and they sport an effectively direct connection to the eyes via the optic nerve. When the participant stares at an orb on the screen that blinks at a known frequency, their brain processes that blinking in enigmatically complex ways. But one side-effect of that processing – an increase in electrical activity at the same frequency as the blinking orb – is sufficiently pronounced that it can be detected in the electromagnetic soup at the surface of the head. These detectable artifacts are called Visually Evoked Potentials, or VEPs.
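To make the idea concrete, here is a minimal sketch of how the energy at one known flicker frequency could be picked out of a noisy signal. It uses the Goertzel algorithm, which is our choice for illustration and not necessarily what Mind Balance used. The sample rate, the two flicker frequencies, and the synthetic "EEG" are all assumptions made up for the example.

```python
import math
import random

# Hypothetical values, chosen only for illustration.
SAMPLE_RATE = 256            # samples per second
LEFT_HZ, RIGHT_HZ = 15.0, 20.0


def goertzel_power(samples, sample_rate, target_hz):
    """Return the squared magnitude of the DFT bin nearest target_hz."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)      # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def synthetic_eeg(stared_hz, seconds=2.0, seed=0):
    """Fake signal: a sine at the stared-at frequency buried in Gaussian noise."""
    rng = random.Random(seed)
    n = int(SAMPLE_RATE * seconds)
    return [
        math.sin(2.0 * math.pi * stared_hz * t / SAMPLE_RATE) + rng.gauss(0.0, 1.0)
        for t in range(n)
    ]


if __name__ == "__main__":
    signal = synthetic_eeg(LEFT_HZ)
    print("power at left frequency: ", goertzel_power(signal, SAMPLE_RATE, LEFT_HZ))
    print("power at right frequency:", goertzel_power(signal, SAMPLE_RATE, RIGHT_HZ))
```

In this toy setup the power at the stared-at frequency stands well clear of the other one, which is the property a VEP detector relies on. Real scalp recordings are far messier than a sine wave plus noise.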

If the Mawg slips to the right, the participant can help shift the creature’s balance back to the left by staring at the orb flickering on the left-hand side of the screen. The subsequent change in brainwave electrical activity is detected by the system as a VEP, and transformed into a one-dimensional analog control axis that can be used to get the Mawg back on track.
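One simple way to turn two frequency measurements into a single analog axis is sketched below. It assumes the system has already measured the signal power at each orb's flicker frequency. The normalised-difference formula and the smoothing constant are our own illustrative choices, not the published Mind Balance method.

```python
def control_axis(left_power, right_power):
    """Map two non-negative powers to an axis in [-1, 1].

    -1 means "fully left", +1 means "fully right", 0 means no preference.
    """
    total = left_power + right_power
    if total <= 0.0:
        return 0.0
    return (right_power - left_power) / total


class SmoothedAxis:
    """Exponential smoothing so the character does not jitter on noisy input."""

    def __init__(self, alpha=0.2):   # alpha is a hypothetical tuning value
        self.alpha = alpha
        self.value = 0.0

    def update(self, raw):
        self.value += self.alpha * (raw - self.value)
        return self.value


if __name__ == "__main__":
    axis = SmoothedAxis()
    # The participant stares at the left orb for a few analysis windows.
    for left, right in [(3.0, 1.0), (4.0, 1.0), (5.0, 1.0)]:
        print(round(axis.update(control_axis(left, right)), 3))
```

Dividing by the total power keeps the axis independent of overall signal strength, which varies from person to person and session to session.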

Technical Overview

All of this requires some fairly fancy graphical and signal processing footwork. For the blinking orbs to produce a signal that can be reliably detected, they must be rendered at a consistent 60 frames-per-second or more. Symphony’s C# graphics engine and scenegraph are capable of rendering the orbs, together with the animated Mawg and his environment, at over 100 fps on conventional hardware running Windows XP.
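A quick sketch shows why a steady frame rate matters. If we assume the flicker is locked to the display's frames (an assumption on our part, not something stated about Symphony), then one on/off cycle must last a whole number of frames, and only certain flicker frequencies are available. The function names here are hypothetical.

```python
def frame_locked_frequencies(refresh_hz, max_frames_per_cycle=8):
    """Map frames-per-cycle to the flicker frequency it yields, in Hz."""
    return {n: refresh_hz / n for n in range(2, max_frames_per_cycle + 1)}


def orb_is_lit(frame_index, frames_per_cycle):
    """Lit for the first half of each cycle (rounded up), dark for the rest."""
    on_frames = (frames_per_cycle + 1) // 2
    return frame_index % frames_per_cycle < on_frames


if __name__ == "__main__":
    for frames, hz in frame_locked_frequencies(60).items():
        print(f"{frames} frames per cycle -> {hz:.2f} Hz")
    # On/off pattern of a 15 Hz orb over the first 8 frames at 60 fps.
    print([orb_is_lit(i, 4) for i in range(8)])
```

Under this assumption, a dropped frame stretches one cycle and smears the orb's frequency, which makes the corresponding brain response harder to pick out.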

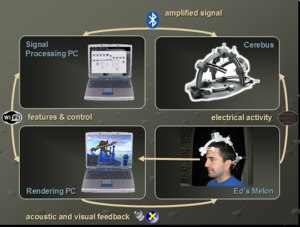

It would certainly be possible, given performance figures like these, to perform signal acquisition and processing on the same PC that is rendering the graphics. But in order to facilitate rapid development, and decouple the signal acquisition and processing steps from the actual gameplay, we used the Sponge signal processing framework (a component of Symphony) to offload signal processing to another PC. On that signal acquisition PC, the electrical signals are acquired from the brain, VEPs are detected, and the left-right feature is extracted. That simple feature is then sent across the network to the computer that controls the Mawg and renders the Mind Balance world.
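The hand-off between the two machines can be sketched in a few lines. We don't describe Sponge's actual wire protocol here, so everything below is an assumption: the use of UDP, the packet layout, and all function names are hypothetical stand-ins.

```python
import socket
import struct

# Hypothetical packet layout: uint32 sequence number + float64 axis value,
# in network byte order (12 bytes total).
FEATURE_FORMAT = "!Id"


def encode_feature(sequence, axis):
    """Pack one left-right feature sample into bytes."""
    return struct.pack(FEATURE_FORMAT, sequence, axis)


def decode_feature(packet):
    """Unpack bytes into a (sequence, axis) tuple."""
    return struct.unpack(FEATURE_FORMAT, packet)


def send_feature(sock, address, sequence, axis):
    """Signal-processing side: fire one sample at the game machine."""
    sock.sendto(encode_feature(sequence, axis), address)


def loopback_demo():
    """Send one sample to ourselves over 127.0.0.1 and return what arrives."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))      # let the OS pick a free port
        receiver.settimeout(1.0)
        send_feature(sender, receiver.getsockname(), 1, -0.5)
        packet, _ = receiver.recvfrom(64)
        return decode_feature(packet)
    finally:
        sender.close()
        receiver.close()
```

Calling `loopback_demo()` exercises both ends on one machine. A sequence number lets the game side ignore packets that arrive late or out of order, which matters for a datagram transport that does not guarantee ordering.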

It’s a comparatively simple step to take the Mind Balance technology and add another axis, thereby turning it into a two-dimensional controller. And another technique currently under development involves the observation of imagined muscle movements. Instead of staring at the blinking orb, with this technique you would just imagine moving your left hand, and the character would move left. So although today we’re just taking the Mawg out for a walk, tomorrow we may be making Jedi Mind Tricks a reality!

Answers to some questions

1. Do you need a lot of complicated training to use Mind Balance?

No. A new player only requires a 45-second training phase that uses acoustic feedback. During that time, both the participant and the system are being “trained.” The system determines the unique baseline EEG patterns that it can expect from the participant, and the participant discovers what constitutes a “good stare,” because the steady-state VEP feature their brain produces is governed more by focus-of-attention than by which objects are present in their field of view.
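As a rough illustration of the system's half of that training, the sketch below records feature values during a calibration period and then expresses later readings relative to that personal baseline. The mean-and-spread approach and the sample numbers are assumptions for the example, not the actual Mind Balance calibration.

```python
import statistics


def fit_baseline(training_values):
    """Summarise the calibration readings as (mean, standard deviation)."""
    mean = statistics.fmean(training_values)
    spread = statistics.pstdev(training_values)
    return mean, spread


def normalise(value, baseline):
    """Express a reading in standard deviations from the participant's baseline."""
    mean, spread = baseline
    if spread == 0.0:
        return 0.0
    return (value - mean) / spread


if __name__ == "__main__":
    # Made-up feature values collected during the training phase.
    baseline = fit_baseline([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
    print(baseline)
    print(normalise(9.0, baseline))
```

Normalising this way means the same threshold for a “good stare” can be applied to participants whose raw signal levels differ widely.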

2. Is the technology experimental only? Is it ready for the market?

Currently, the technology is in a prototype phase, but there is no reason why it couldn’t eventually find its way to the market. The Symphony software architecture runs on conventional hardware, and we often ran Mind Balance on a laptop. The Cerebus hardware device is patented, and it was our hope that it would move beyond the experimental phase. I’m afraid that with the lab now closed, we can’t be more specific about the timeline!

High Resolution Images

High-resolution images are available as a compressed zip file, or on my Flickr photostream.

Special thanks

Mind Balance was developed by the MindGames Group at MIT MediaLabEurope, in collaboration with our colleagues at University College Dublin, including Simon Kelly and Ray Smith of its Elec. Eng. department.